Securing the Age of AI: A Review of McKinsey’s “The Cybersecurity Provider’s Next Opportunity: Making AI Safer”

Executive Summary

- Growing Attack Surface: AI-driven systems introduce new vulnerabilities, from data pipelines to model training processes, requiring cybersecurity to expand beyond traditional IT boundaries.

- Continuous Monitoring: Proactive, ongoing security practices can help organizations stay ahead of evolving AI threats and vulnerabilities.

- Regulatory Pressures: AI-specific rules such as the EU AI Act, together with established privacy laws such as GDPR and CCPA, underscore the need for compliant AI solutions and strong privacy protections.

- Collaboration & Transparency: Information sharing among cybersecurity professionals, AI developers, and business stakeholders builds shared knowledge and more resilient defenses.

- Talent & Skills: Cybersecurity teams need upskilling in AI-related risks and tools so that they can recognize and counter AI-specific threats.

These points show how organizations can pair established security and risk frameworks, such as ISO/IEC 27001 and the NIST AI Risk Management Framework (AI RMF), with AI-specific security measures. By building defenses into every stage of AI development and deployment, businesses can unlock AI’s potential while minimizing risk.

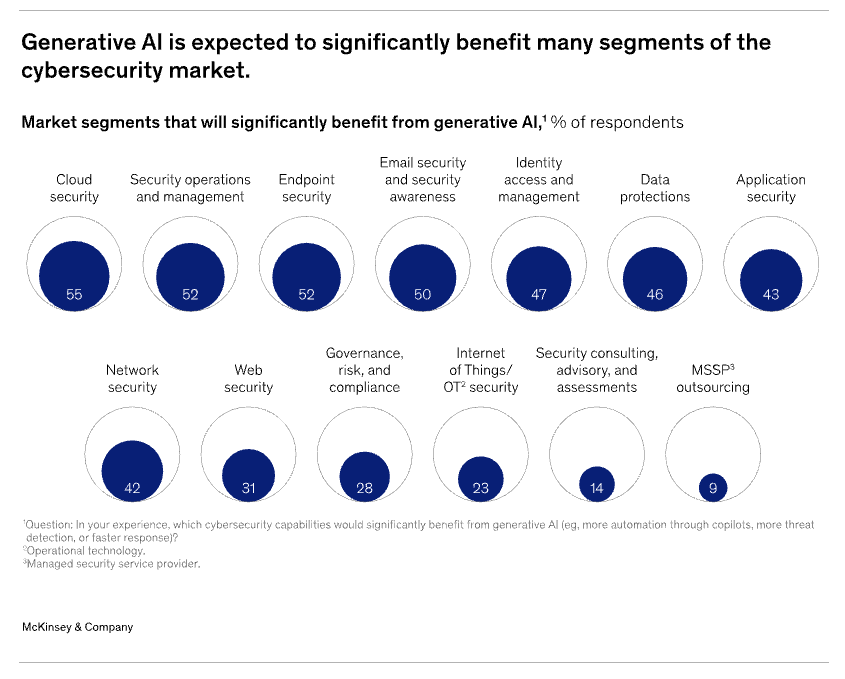

Artificial intelligence (AI) has rapidly moved from a niche technology to a fundamental driver of innovation across nearly every industry. However, with the growing reliance on AI comes an equally urgent need to secure these systems from cyberthreats. McKinsey’s article, “The Cybersecurity Provider’s Next Opportunity: Making AI Safer,” shines a spotlight on how cybersecurity providers can seize the moment to develop safer AI environments for their clients.

Key Takeaways

AI’s Expanding Attack Surface

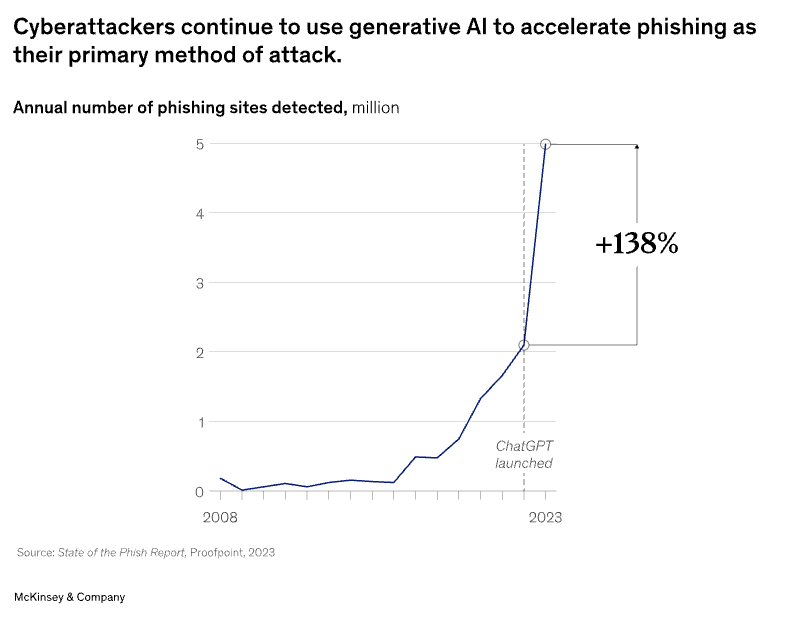

As organizations adopt AI at scale, the potential attack surface grows significantly. Malicious actors can exploit everything from data-gathering pipelines to the models themselves. McKinsey emphasizes that cybersecurity must broaden its scope beyond traditional IT infrastructure. It must also cover the complex data and training workflows that produce AI models.
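One concrete way to cover the data-gathering side of this attack surface is to pin training data to known-good content hashes. Any change between collection and training then becomes detectable. The sketch below is illustrative and not taken from McKinsey’s article. The manifest format (relative file path mapped to a SHA-256 hex digest) and the function names `sha256_of`, `build_manifest`, and `verify_manifest` are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 16) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Map each file's path (relative to data_dir) to its SHA-256 digest."""
    return {
        p.relative_to(data_dir).as_posix(): sha256_of(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def verify_manifest(data_dir: Path, manifest: dict[str, str]) -> list[str]:
    """List files that are missing, modified, or unexpected; empty means intact."""
    current = build_manifest(data_dir)
    problems = []
    for name, expected in sorted(manifest.items()):
        actual = current.get(name)
        if actual is None:
            problems.append(f"missing: {name}")
        elif actual != expected:
            problems.append(f"modified: {name}")
    for name in sorted(current.keys() - manifest.keys()):
        problems.append(f"unexpected: {name}")
    return problems


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "train.csv").write_text("id,label\n1,cat\n")
        manifest = build_manifest(root)  # record at a trusted point, store separately
        print(verify_manifest(root, manifest))  # []
        (root / "train.csv").write_text("id,label\n1,dog\n")  # simulated tampering
        print(verify_manifest(root, manifest))  # ['modified: train.csv']
```

A hash check only proves that data has not changed since the manifest was recorded. It says nothing about whether the data was trustworthy at that point. For that reason, the manifest itself must be stored where an attacker who can alter the dataset cannot also rewrite it.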

Proactive, Continuous Monitoring

McKinsey’s article underlines the importance of continuous, proactive security. Rather than reacting to each new vulnerability after it surfaces, AI security strategies should combine active monitoring, adaptive defenses, and the agility to adjust as threats evolve.
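As one illustration of what active monitoring can mean for a deployed model, the sketch below computes the Population Stability Index (PSI). PSI is a common statistic for measuring how far a feature’s production distribution has drifted from its training baseline. McKinsey does not prescribe this technique. The equal-width binning, the `eps` floor, the 0.25 alert threshold (a widely used rule of thumb, not a standard), and the function names `psi` and `drift_alert` are all assumptions.

```python
import math
import random
from typing import Sequence


def psi(baseline: Sequence[float], live: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `live` against `baseline` for one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant baseline

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Equal-width bins over the baseline range; out-of-range values are
            # clamped into the first or last bin.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor empty bins at eps so the log term below stays finite.
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(live)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_alert(baseline: Sequence[float], live: Sequence[float], threshold: float = 0.25) -> bool:
    """Flag a feature for investigation when PSI exceeds a rule-of-thumb threshold."""
    return psi(baseline, live) > threshold


if __name__ == "__main__":
    rng = random.Random(0)
    training = [rng.gauss(0.0, 1.0) for _ in range(5_000)]
    production = [rng.gauss(1.5, 1.0) for _ in range(5_000)]  # simulated shift
    print(f"PSI = {psi(training, production):.2f}, alert = {drift_alert(training, production)}")
```

A sustained PSI jump can reflect benign changes in the data as easily as deliberate manipulation. It is therefore better treated as a trigger for investigation than as a verdict.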

Regulatory Pressures and Compliance

Regulatory bodies around the world are paying closer attention to AI’s ethical and security implications. Cybersecurity providers that can demonstrate compliance with these requirements, and that offer solutions built on robust privacy and data-management controls, stand to gain a competitive advantage.

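- The EU AI Act (still a proposal when McKinsey’s article appeared, formally adopted in 2024) classifies AI systems into risk tiers. These run from prohibited unacceptable-risk uses through high-risk, limited-risk, and minimal-risk systems, with obligations scaling by tier.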

- Data privacy regulations (e.g., GDPR in the EU or CCPA in California) restrict how personal data, especially sensitive categories of data, may be collected and processed, including when it is used to train AI models.
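A common engineering response to these constraints is to minimize and pseudonymize records before they enter a training pipeline. The sketch below drops fields the model does not need and replaces direct identifiers with keyed HMAC-SHA256 tokens, so records stay joinable without exposing raw values. It is illustrative only. The field lists and the `prepare_for_training` name are hypothetical, and under GDPR, pseudonymized data still counts as personal data. This approach reduces exposure but does not remove legal obligations.

```python
import hashlib
import hmac
from typing import Any

# Hypothetical field lists; in practice these would come from a data inventory
# and privacy review rather than being hard-coded.
PSEUDONYMIZE = ("email", "customer_id")
DROP = ("full_name", "phone")


def prepare_for_training(record: dict[str, Any], key: bytes) -> dict[str, Any]:
    """Return a copy of `record` with direct identifiers dropped or pseudonymized."""
    out = {k: v for k, v in record.items() if k not in DROP}  # data minimization
    for field in PSEUDONYMIZE:
        if out.get(field) is not None:
            # Keyed HMAC-SHA256: the same value and key always yield the same token,
            # so records stay joinable, but tokens cannot be recomputed without the key.
            out[field] = hmac.new(key, str(out[field]).encode("utf-8"), hashlib.sha256).hexdigest()
    return out


if __name__ == "__main__":
    # Placeholder key for illustration only; real keys belong in a secrets manager.
    key = b"example-key-do-not-use"
    row = {"customer_id": 42, "email": "a@example.com", "full_name": "A. Person",
           "phone": "555-0100", "purchases": 3}
    print(prepare_for_training(row, key))
```

A keyed HMAC is used here instead of a plain hash because unkeyed hashes of low-entropy values such as emails or IDs can be reversed by hashing guessed values.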

Collaboration and Transparency

McKinsey highlights the need for more open forums, collaborative coalitions, and information sharing between cybersecurity experts, AI developers, and relevant stakeholders. This culture of transparency can help organizations preempt emerging threats and respond more effectively to security incidents.

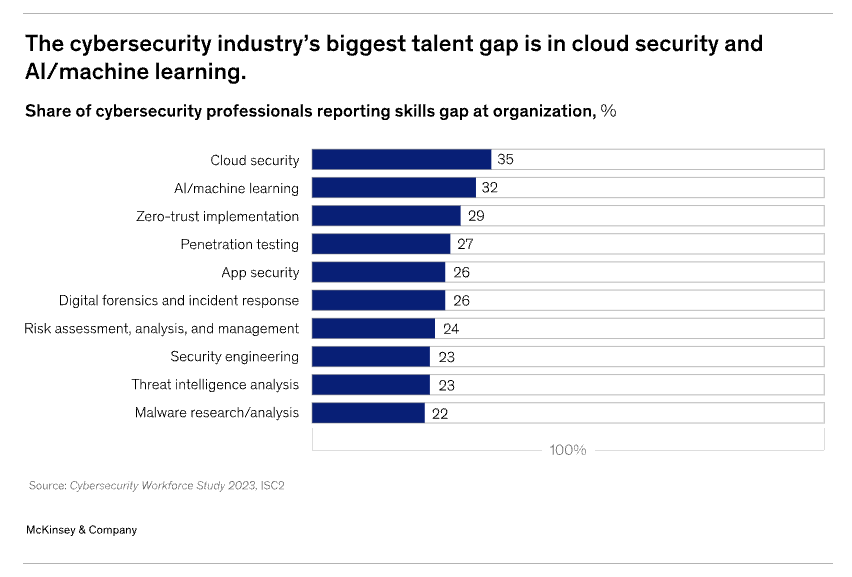

Upskilling Cybersecurity Teams

Many cybersecurity teams lack the specialized AI knowledge needed to identify and mitigate AI-specific threats. McKinsey suggests that upskilling current cybersecurity professionals, or hiring specialized talent, will be critical to building a fully informed and capable workforce.

Implications for Organizations

Organizations seeking to implement AI at scale must develop a robust strategy that blends leading-edge cybersecurity practices with AI-specific safeguards:

- Adopt an AI-centric risk assessment model: Rather than applying generic cybersecurity frameworks, modify them to incorporate AI’s unique vulnerabilities (e.g., adversarial inputs, data poisoning, and model evasion).

- Relevant Reference: The NIST AI Risk Management Framework (AI RMF) offers guidance on identifying, assessing, and mitigating AI risks.

- Invest in long-term talent development: Align your workforce strategies with emerging AI-security demands. This includes in-house AI security training, external certifications, or hiring data scientists who understand both AI development and cybersecurity fundamentals.

- Partner with security vendors offering AI solutions: Look for cybersecurity providers that not only sell AI security products but also have deep expertise in implementing and managing AI-driven defenses.

- Relevant Reference: ISO/IEC 27001 remains a mainstay for information security management, and it can be extended to AI workflows with additional risk assessments.
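The first recommendation can be made concrete with a small risk register that scores AI-specific threats alongside existing IT risks. The sketch below is illustrative rather than drawn from McKinsey, NIST, or ISO. The `AiRisk` and `prioritize` names, the 1 to 5 likelihood and impact scales, and the threshold of 12 are all hypothetical choices. Identifying risks, scoring them, and acting on the highest-ranked ones loosely mirrors the Map, Measure, and Manage functions of the NIST AI RMF, to which a real register would be tied through an organization’s own governance process.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AiRisk:
    """One entry in a hypothetical AI risk register."""
    threat: str       # e.g. an AI-specific vulnerability such as data poisoning
    asset: str        # what is exposed: dataset, model, inference endpoint, ...
    likelihood: int   # 1 (rare) to 5 (almost certain); illustrative scale
    impact: int       # 1 (negligible) to 5 (severe); illustrative scale
    mitigation: str

    def __post_init__(self) -> None:
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        """Simple likelihood x impact score, ranging from 1 to 25."""
        return self.likelihood * self.impact


def prioritize(register: list[AiRisk], threshold: int = 12) -> list[AiRisk]:
    """Return risks scoring at or above `threshold`, highest score first."""
    flagged = [r for r in register if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    register = [
        AiRisk("data poisoning", "training data pipeline", 3, 5,
               "signed dataset manifests and provenance checks"),
        AiRisk("adversarial inputs", "image-classification API", 4, 3,
               "input validation and adversarial testing"),
        AiRisk("model evasion", "fraud-detection model", 2, 4,
               "human review of low-confidence decisions"),
    ]
    for risk in prioritize(register):
        print(f"{risk.score:>2}  {risk.threat} -> {risk.mitigation}")
```

Multiplying likelihood by impact is a deliberately simple scoring choice. Organizations already using a scoring method under ISO/IEC 27001 would more naturally reuse that method for AI risks so that both kinds of risk can be ranked on one scale.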

Additional Resources and References

- McKinsey & Company. “The Cybersecurity Provider’s Next Opportunity: Making AI Safer.” McKinsey (2023).

- National Institute of Standards and Technology (NIST). AI Risk Management Framework (AI RMF) (2023)

- ISO/IEC. ISO/IEC 27001 – Information Security Management

- NIST. Cybersecurity Framework (CSF)

- European Commission. Proposal for a Regulation Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) (2021)

Conclusion

McKinsey’s article is a timely reminder that as AI advances, so do the threats aimed at it. Cybersecurity providers now have a pivotal role not just in protecting data and systems, but in ensuring that AI is developed and deployed responsibly and securely. Organizations can harness AI’s transformative potential without compromising security by extending traditional cybersecurity strategies to AI, collaborating across disciplines, upskilling their teams, and monitoring continuously.